My adventures in infosec. Pentesting, red teaming and the rest of it.

Bypassing CloudFlare

Recently I found myself up against CloudFlare. I was able to sidestep it during the engagement and then get SQLMap working. If you are short on time, here is the technique in a nutshell: I hope that gets you where you need to go. If you have the time, the context is often entertaining. Why,…


Can’t crack an NTLMv2 hash caught by Responder? What next?

It finally happened: you have used Responder to capture hashes but failed to crack them. This post covers one more way you can use Responder to gain access anyway. Just Give Me the Steps. If you are time-poor, here are just the steps: In this configuration, NTLMRelayX will relay any NTLMv2 hashes it receives…


Reverse shell over UDP using PowerShell

This post is really a reminder to myself that this exists. The hard work was done on labofapenetrationtester.com back in 2015. I found that it worked well for me. First, the code from that blog (only slightly modified): Note 1: if you use the code from the git repository, it will be caught by Windows Defender…


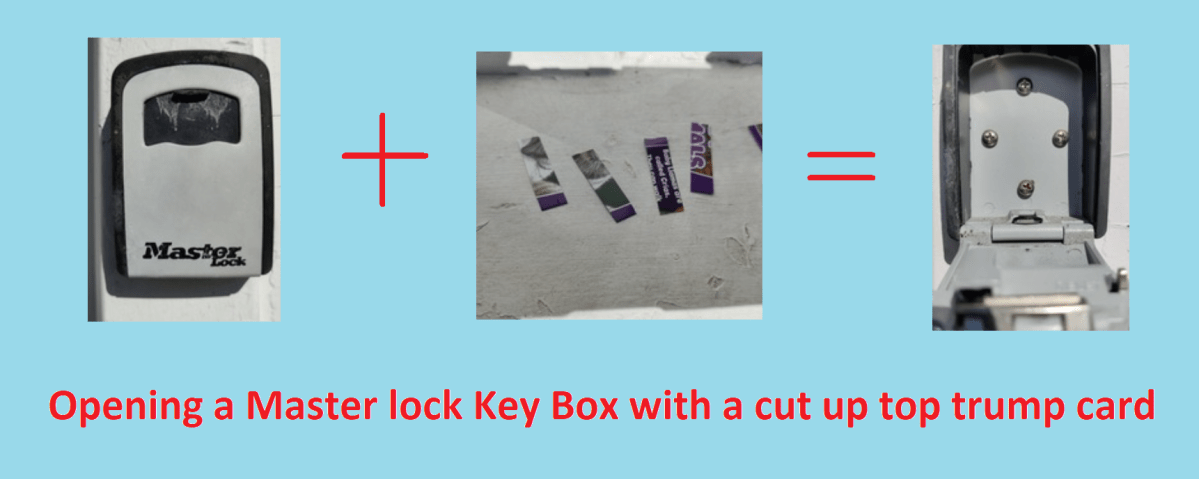

Beating a Master Lock (key box)

I moved house a while back, and the place came with one of those Airbnb-style key boxes on the front, made by Master Lock. There was nothing in the handover about it, and it had likely belonged to the previous previous owners, as it looked like it had been unloved for a long time.…


Finding Missing Patches the hard way

In this post I present a short piece of PowerShell that helped me find missing patches in a .NET application. The target was a thick client where source code was not provided. Almost everything has outdated dependencies, and my goal is to see if any of them will provide an obvious way to…
